Software that automates marketing (HubSpot, Mailchimp, etc.) or lets teams interact with existing customers in real time (Intercom, Zendesk, etc.) exists to give marketing and customer service professionals a system and user interface that makes their jobs easier. But sometimes these SaaS tools are used so heavily that they become hard to manage.

Software engineering teams develop tech debt. Analytics teams develop data debt. The quick onboarding of SaaS tools makes it hard to even recognize when simple processes spiral out of control into black holes of manual work. Process debt (what I'm calling SaaS debt) happens when maintaining SaaS tools involves more manual work than automated work.

Imagine bringing on a series of tools, patching together some processes without documentation, then trying to make a change somewhere in the middle. A system built piecemeal turns what should be a simple change into pure chaos.

This doesn't have to be the reality for business teams. Programming concepts (which actually have nothing to do with code or numbers) can help business teams make processes modular and easy to maintain.

An important aside

First, I'd like to say explicitly: this is by no means a jab at business teams. It's more an observation that existing tooling doesn't enable business teams to perform at their highest potential.

Coming out of a STEM degree, I have modularity tattooed into my brain right next to the tattoo of how to tie my shoes. Tools for software engineers are built on this concept: implementing processes that don't rapidly grow in complexity over time. Meanwhile, vendors are still pushing Spreadsheets 2.0 to non-technical users as a replacement for databases.

Just as there was no how-to for analytics engineers a decade ago (one is only now starting to exist in the form of 4-year degrees), I fundamentally believe SaaS tools don't educate users on how to build scalable processes. The vendor and the customer both want to get started quickly, not spend time architecting. At some point, the 1,000-foot view becomes important. But when?

Let's take a look at Mailchimp. After signing up, the first thing I'm prompted to do is set up a one-off email. That's potentially a great way to get started and understand how the tool works, but it immediately leaves me wondering how I would productively scale from a few ad hoc emails to a few dozen weekly, all the way up to a few hundred daily.

Importing contacts through a CSV one time is okay, but doing that repetitively is not sustainable. Someone going on vacation for a week shouldn’t impact a marketing list. Naturally, I’d look to get contacts into Mailchimp automatically.

Zapier is used heavily in the BizOps world and can handle exactly this use case. As a workflow automation tool, it offers no-code integrations with a wide variety of SaaS tools. What's the harm in powering Mailchimp email lists from a Google Sheet?

Consider an email list that depends on logic identifying which customers are about to churn based on their latest website activity. The website activity is captured as tracking events in Amplitude. Somewhere, those events need to be aggregated to the user level, with logic on top to actually create a filtered list of customers worth emailing. If the source of truth is a Google Sheet, how will the website activity get in there? Even if it does, who's going to catch a bug in the churn logic if the output is accidentally backwards?
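To make the "aggregate, then filter, then sanity-check" chain concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration: the event rows are hypothetical, the churn rule ("no activity in the last 30 days") is made up, and it does not use any Amplitude or Mailchimp API. The point is the last step: a check built from known cases that would stop the send if the logic came out backwards.

```python
from datetime import datetime, timedelta

# Hypothetical tracking events, loosely shaped like rows exported from an
# event tool such as Amplitude: (user_id, event_time).
events = [
    ("u1", datetime(2024, 5, 1)),
    ("u1", datetime(2024, 5, 28)),
    ("u2", datetime(2024, 4, 2)),
    ("u3", datetime(2024, 4, 20)),
    ("u3", datetime(2024, 5, 30)),
]


def last_seen_by_user(events):
    """Aggregate raw events to one row per user: their most recent activity."""
    last_seen = {}
    for user_id, ts in events:
        if user_id not in last_seen or ts > last_seen[user_id]:
            last_seen[user_id] = ts
    return last_seen


def at_risk_users(last_seen, as_of, inactive_days=30):
    """Assumed churn rule: no activity in the last `inactive_days` days."""
    cutoff = as_of - timedelta(days=inactive_days)
    return sorted(user for user, ts in last_seen.items() if ts < cutoff)


as_of = datetime(2024, 6, 1)
last_seen = last_seen_by_user(events)
churn_list = at_risk_users(last_seen, as_of)

# Safeguard built from known cases: u1 was active four days before as_of and
# u2 has been silent for two months. If the comparison above were accidentally
# flipped, these two would swap places and this check would stop the send.
assert "u1" not in churn_list and "u2" in churn_list, "churn logic looks backwards"
print(churn_list)
```

Whether this lives in a warehouse, a script, or a spreadsheet, the known-case check is the part that answers "who's going to catch the bug?"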

I’m not an expert in Mailchimp, nor am I an expert in Zapier. There are surely other SaaS tools that can be used for this particular case. If the use case changes slightly or is expanded, the budgeting team isn’t going to be happy with onboarding yet another workflow automation tool. Vendors aren’t consultants, and learning the principles of complex systems within the context of only one tool isn’t easy.

The hardest part of building scalable processes is understanding how all the pieces fit together and what safeguards need to be in place to ensure smooth execution. Of course, anyone coming from analytics will say this is where the modern data stack comes in. But even after data lands in operational tools, there's still potential for SaaS debt.

Business teams can and should build complex processes to be more productive. And they do, but often with a lot of manual work supporting the end-to-end system. That doesn't have to be the only way. If degrees won't teach this and vendors shouldn't have to, where do we start?

The big 3: templating, testing, versioning

Just as philosophy is math without numbers, take the code out of system design and you've got the principles of building scalable operational processes. I don't believe building scalable operational processes is just one notch on the technical literacy spectrum, as A16Z puts it. I do not believe everyone needs to code.

What I do believe is that everyone would benefit from understanding three concepts that are core to scaling workflows (and, by extension, to avoiding SaaS debt).

Templating: Making a system modular means making its components independent and reusable. Have a footer with your company address in every email campaign? Instead of pasting the footer text into each email before sending, create one object and reference it in all workflows. If the footer needs to change, the change only has to happen in one place for all emails to inherit the new footer. Fortunately, templating is supported in many operational tools.
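The footer idea can be sketched in a few lines of Python, using the standard library's `string.Template` rather than any particular email tool's templating feature. The company details and campaign names are hypothetical. Each campaign holds a `$footer` placeholder instead of a pasted copy, and the shared footer is looked up when the email is rendered.

```python
from string import Template

# A single shared footer object (hypothetical company details). Campaigns
# reference it through a placeholder instead of carrying a pasted copy.
FOOTER = "Acme Inc. | 123 Main St | Springfield"

# Hypothetical campaign bodies; $footer is filled in at send time.
CAMPAIGNS = {
    "welcome": Template("Hi $name, welcome aboard!\n--\n$footer"),
    "renewal": Template("Hi $name, your plan renews soon.\n--\n$footer"),
}


def render(campaign, name):
    # FOOTER is looked up on every call, so editing it once updates
    # every campaign that references it.
    return CAMPAIGNS[campaign].substitute(name=name, footer=FOOTER)


print(render("welcome", "Sam"))

# The office moves: one edit, and both campaigns inherit the new footer.
FOOTER = "Acme Inc. | 456 Oak Ave | Springfield"
print(render("renewal", "Sam"))
```

The design choice worth copying into any tool is that the footer is referenced, not duplicated: there is exactly one place to change it.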

Testing: Workflows are designed with one expected behavior in mind, but you should always expect the unexpected. Mistakes happen. Testing automated workflows with limited exposure helps catch mistakes before they impact end users. With today's tools, this is still somewhat manual, but having a process is an important first step.
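Here is one way "limited exposure" might look as a process, sketched in Python under assumed rules: a few automated pre-flight checks (the specific checks, such as the unsubscribe requirement and `$`-style placeholders, are illustrative choices), followed by a reproducible split of the audience into a small first batch and a held-back remainder. The actual sending step is left out because it depends on the tool.

```python
import random
import re


def preflight_checks(message, audience):
    """Return a list of problems; an empty list means the send may proceed."""
    problems = []
    # Leftover $placeholders (string.Template style) mean a merge field was never filled.
    if re.search(r"\$[_A-Za-z]", message):
        problems.append("unfilled placeholder")
    if "unsubscribe" not in message.lower():
        problems.append("missing unsubscribe link")
    if not audience:
        problems.append("empty audience")
    return problems


def canary_split(audience, fraction=0.05, seed=42):
    """Split an audience into a small first batch and the remainder."""
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    shuffled = list(audience)
    rng.shuffle(shuffled)
    k = max(1, int(len(shuffled) * fraction))
    return shuffled[:k], shuffled[k:]


audience = [f"user{i}@example.com" for i in range(200)]
message = "Hi there, here's what's new. Unsubscribe: https://example.com/unsub"

problems = preflight_checks(message, audience)
if problems:
    print("Blocked:", problems)
else:
    first_batch, remainder = canary_split(audience)
    # Send first_batch (plus internal test inboxes), review the results,
    # and only then release the remainder.
    print(f"first batch: {len(first_batch)}, held back: {len(remainder)}")
```

Even if every step is done by hand in a marketing tool, writing the checks and the batch size down turns "be careful" into a repeatable process.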

Versioning: If an issue appears, knowing which change caused it lets you revert to a previously working version of the workflow. This, of course, relies on storing previous versions. Ideally I'd have a better answer here, but there isn't an automated way to version objects like workflows in a customer success tool or audiences in a marketing tool. If the no-code revolution is real, versioning is inevitable.
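Since most operational tools don't version workflows for you, here is a minimal Python sketch of what "storing previous versions" buys you. It assumes a workflow can be exported as a plain dictionary; the field names (`trigger`, `tag`, `wait_days`) and the `VersionedWorkflow` class are hypothetical. Every save is kept, any two versions can be diffed, and a revert is recorded as a new version rather than erasing history.

```python
import copy
import difflib
import json


class VersionedWorkflow:
    """Keeps every saved version of a workflow definition (a plain dict here)."""

    def __init__(self, definition):
        self.versions = [copy.deepcopy(definition)]

    @property
    def current(self):
        # Hand out a copy so edits can't silently change a stored version.
        return copy.deepcopy(self.versions[-1])

    def save(self, definition):
        self.versions.append(copy.deepcopy(definition))
        return len(self.versions) - 1  # the new version number

    def diff(self, old, new):
        """Show what changed between two versions, line by line."""
        a = json.dumps(self.versions[old], indent=2, sort_keys=True).splitlines()
        b = json.dumps(self.versions[new], indent=2, sort_keys=True).splitlines()
        return "\n".join(difflib.unified_diff(a, b, f"v{old}", f"v{new}", lineterm=""))

    def revert_to(self, version):
        # Reverting is itself a new version, so history is never rewritten.
        return self.save(self.versions[version])


wf = VersionedWorkflow({"trigger": "tag_added", "tag": "churn-risk", "wait_days": 3})

edited = wf.current
edited["wait_days"] = 0  # a "quick fix" made directly to the workflow
v1 = wf.save(edited)
print(wf.diff(0, v1))

# Something broke after v1: roll back by saving v0 again as the newest version.
wf.revert_to(0)
print(wf.current)
```

Done manually, the equivalent is exporting or screenshotting a workflow's settings before each edit and noting what changed.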

Where does that leave operational teams

I ask you: would these approaches, albeit manual for now, save you time in the long run? If so, they're worth it.

Excel (and, similarly, Airtable) is an ingenious piece of software, but there's just too much room for human error when you try to scale it as part of a larger system. Likewise, workflow automation tools make moving data and files from one place to another easy. They also make it easy to make a mistake.

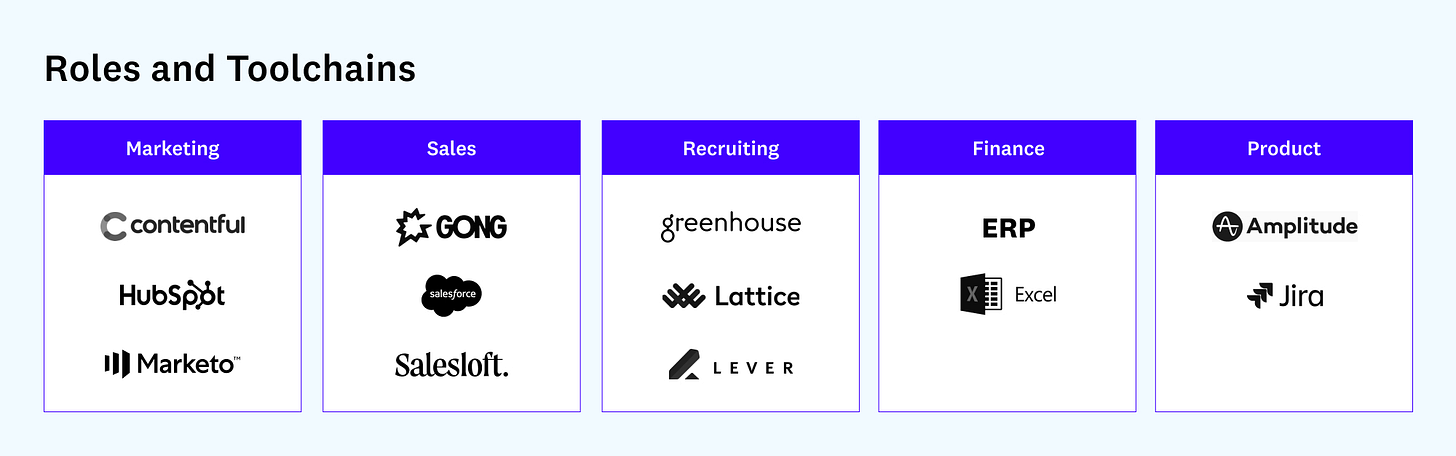

Analytics teams are also uniquely positioned to understand the business and help implement these approaches. Maybe implementing these types of processes in both analytics and operational teams will emerge as a new role in the analytics or operations ecosystem, because of course there aren’t enough roles as it is between Analyst, Analytics Engineer, Data Scientist, Sales Engineer, Marketing Analyst, Data Engineer, Technical Evangelist, Coding Monkey, Data Magician, etc.

Thanks for reading! If you’re a business or analytics professional and have any opinions on the topic, please reach out. I’d love to hear from you.

This resonates a lot. During my time at Integromat (Zapier's biggest competitor and now Make.com), I would tell people all the time how important it is to "design" a workflow before building it, test active workflows on a regular cadence (set it and forget it is just marketing jargon -- every workflow breaks), as well as create versions instead of editing live workflows.

I think you've highlighted the biggest problem here -- people and vendors want to get started quickly and nobody wants to think about this stuff. That said, one of the reasons for customers churning at Integromat was that the person who built/maintained the workflows was no longer with the company and the buyer had no idea what to do. Moreover, there's often no incentive for a lot of people (employees and consultants alike) to invest time in documentation and implementing safeguards, which is a real problem. And I suspect this problem is even more severe when it comes to implementing and maintaining data tools.