This post is not a sponsored post. Note: I have advised Secoda mentioned in the article, but no vendor has pre-edited this content.

In the 20th century, open floor plans took over first residential homes then slowly but surely office spaces. With a slow transition from boxed-off cubicles to open workspaces even most recently in FAANGs and the like, employees are encouraged to collaborate.

In startup culture, collaboration comes naturally. If the number of employees is anywhere under 100, there’s usually only one or at most a small handful of people responsible for something. With remote work they’re not usually in the same building, but there’s fewer people to sift through. What happens when that number goes to 200 people, 500 people, or 1000+?

Information naturally lives in people’s heads, and it takes extra effort to write it down on paper. I’ve personally tried to make documentation a habit but still slip up and forget. Documentation can live in so many different places: README’s on Github, Confluence, Notion, Google Docs, just to name a few. However, each of these are optimized for their own category of documentation: Github for code, Confluence and Notion for docs and diagramming.

None of them are optimized for data.

What documentation means for analytics

Not being optimized for data means: table documentation quickly becomes stale as new columns are added to the source; data quality isn’t even part of the conversation; there’s no obvious connection between data, business concepts, and BI.

Onboarding a single tool to tie together all different sorts of data allows teams in larger organizations speak the same language without relying on communication over Slack. A tool will never be the full solution—someone has to own it and others have to use it.

Documentation coming from analytics teams must enable anyone to quickly find and understand any data asset they’re looking for to deliver value.

This includes tables, dashboards, and data in operational tools. For analytics teams, the value here comes from less time spent answering the same questions about where information lives or what certain data or dashboards mean. From the perspective of business stakeholders, less time is spent looking for information and better decisions are made as they are less likely to misunderstand the data found.

It doesn’t stop at cataloging which technically means just providing a list of items. Data discovery, data enablement, whatever the most recent term is—however people in an organization discover and understand data, it costs the business time the more it takes to find and the more mistakes are made because it’s misunderstood.

Without further ado, on to evaluating tools.

The tooling landscape

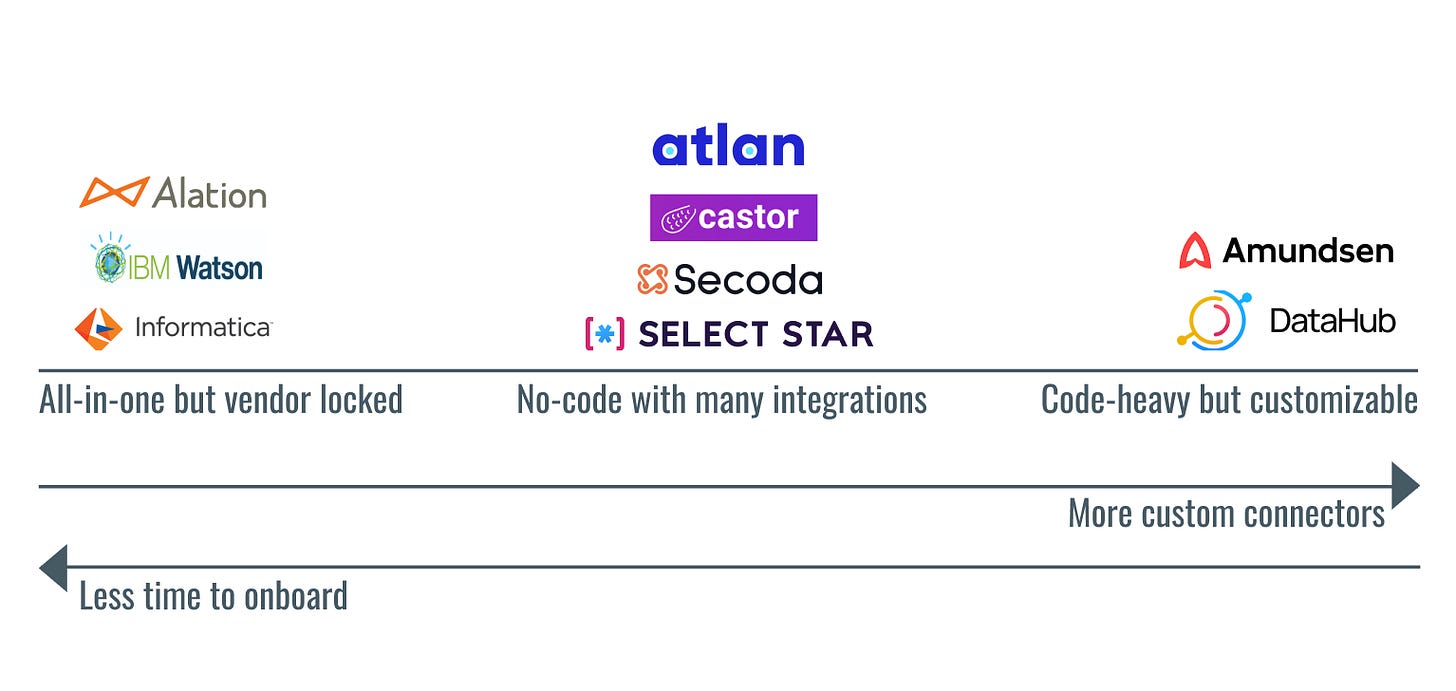

All-in-one ecosystem

General characteristics: Tools here are enterprise ready and want to offer all data solutions within one platform from BI to data quality, making their data catalogs also more feature complete.

Pros: The catalogs part of larger product lines have been around longer and built out robust features when it comes to data documentation, quality, data changes over time, and governance.

Cons: Price tag and vendor lock-in. These companies generally want to keep you in their ecosystem from documentation to quality to BI, so are less motivated to build external integrations.

Choose if: You are a large company, have a large budget, and have strict security protocols that make it easier to onboard one tool instead of several.

Solutions:

Alation: Most similar to newer data cataloging companies showing table metadata like value frequency but with a stress on governance and PII.

IBM: Data quality is at the forefront with clear column-level trends over time and quality scores alongside a range of machine learning solutions.

Informatica: Focused on search and discovery, with tools for analytics teams to manage data size and usage.

Knowledge repository

General characteristics: Newer alternatives to data cataloging focused on enabling the use of data through collaboration and knowledge sharing. These companies offer features like column level lineage, comments, certification of assets, and API access to metadata collected.

Pros: Lower price tag, many integrations with data tools in all sections of the stack from orchestration to BI, ability to also generate long-form documentation.

Cons: May not have the level of security or self-hosting capabilities like the enterprise tools.

Choose if: You use many different data tools and need to unify their metadata, have a lower budget, and want to work closely with a team that can iterate on features quickly.

Solutions:

Atlan: Most enterprise ready, marketing to data teams to collaborate internally.

Castor: Option for readme-like structure for long-form content for data teams and stakeholders alike.

Secoda: Integrates with and provides lineage to reverse ETL tools and notebooks in addition to dashboards.

Select Star: Easy to use search, quickly displaying all table and collaboration metadata.

Open source

General characteristics: Open source (obviously) so there’s a free self-hosting option but requires development time to configure. Targeted more for software engineers with less collaboration and long-form documentation features.

Pros: Self-hosting option to address security concerns, many integrations with data lake and non-relational data sources, community for support.

Cons: More development time if going the open source route, not built for long-form documentation or guides.

Choose if: You have a larger team of software and data engineers, must self-host for security reasons but enterprise software isn’t the right fit.

Solutions:

Amundsen: Governance and data quality features with automated documentation. Created at Lyft, hosting-as-a-service by: Stemma.

DataHub: High focus on data discovery and search, enabling less technical use cases. Created at LinkedIn, hosting-as-a-service by: Acryl.

When to outsource

Before choosing a tool, consider if it’s the right time in your organization’s lifecycle to use a data catalog. Is there someone who will be ruthlessly devoted to documentation, nagging people when they are behind in documenting what they’ve built? Are the data team’s stakeholders amenable to using the tool you choose to answer questions before coming to you?

The first is usually a hurdle to jump over in small organizations, when everything is always on fire. The latter is harder at larger organizations, where different teams might already have their processes.

And sometimes, documentation is best done first in other tools that support lightweight features as a trial run, most often in data quality tools.

Thanks for reading! I talk all things data and startups so please reach out. I’d love to hear from you.

Super nice comparison, especially when used in combination with https://www.holistics.io/blog/a-dive-into-metadata-hubs/

I like this breakdown a lot! This could be a great way to compare other tools along other categories