A Webhook Love Story

How my initial repulsion toward webhooks as a data engineer grew into a deep appreciation for event-driven automation in the world of marketing.

Editorial note: it’s been a while since I’ve sent out a piece to you all. I’ve missed writing. My time has been deeply occupied by the many sweet marketing initiatives in the works at Prefect - one of which has been hiring. If interested in working with me and giving me the great gift of more time to write, check out the roles here.

Today, I love webhooks. They provide a plug-and-play interface to the world of event-driven workflows, especially if you don’t have to host the infrastructure on which they run. From the sound of it, this setup could become completely unscalable from an engineering perspective. Trust me, I get it.

I used to hate webhooks and found them extremely hard to manage during my time as a data engineer. You mean, triggering bespoke APIs from scattered sources without a real ability to run data quality checks, understand errors, retry failures, or encode dependencies? Thanks but no thanks. If a webhook was triggered with bad data, how could I figure out where that bad data came from? This seemed like a black hole of despair when it would inevitably come to debugging and scaling.

So, when I say this admiration for webhooks was far from a predictable love story, I mean it. It was more analogous to the relationship between Sandra Bullock and Ryan Reynolds in The Proposal - an initial forced relationship that evolved into one I would never want to live without.

Here’s my own rom-com-esque relationship with a piece of technology.

What’s about to follow is a business user’s guide to webhooks used largely through a UI - not a deep engineering guide to self-hosting webhook infrastructure. This will be useful if you’re in ops, marketing, sales, and even analytics.

Building context on webhooks

Let’s start with the basics. I like this definition from Zapier: a webhook is a message sent programmatically when something happens.

What is a webhook, at its most basic state?

Webhooks have a few components:

A programmatic event: Data is sent from some application, today usually as a REST API call. The request carries a JSON payload of important information, such as: where the request came from; data about the request like the event name; and other metadata.

An API endpoint: A destination that accepts data sent through a programmatic request from an application, frequently a REST API endpoint.

An API spec: The endpoint usually has parameters or an event structure it agrees to accept, formatted in a spec or documentation if this API is productized. It will error if this spec is not fulfilled.
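To make the three components concrete, here is a minimal sketch of an endpoint checking an incoming event against its spec. The field names (`event_name`, `source`, `email`) and the `handle_request` function are hypothetical, made up for illustration and not taken from any particular product.

```python
from typing import Any

# Hypothetical spec: field name -> required Python type.
LEAD_EVENT_SPEC: dict[str, type] = {
    "event_name": str,
    "source": str,
    "email": str,
}


def validate_event(payload: dict[str, Any], spec: dict[str, type]) -> list[str]:
    """Return a list of readable problems; an empty list means the payload fits the spec."""
    problems = []
    for field, expected_type in spec.items():
        if field not in payload:
            problems.append(f"missing field: {field}")
        elif not isinstance(payload[field], expected_type):
            problems.append(f"wrong type for {field}: expected {expected_type.__name__}")
    return problems


def handle_request(payload: dict[str, Any]) -> tuple[int, dict[str, Any]]:
    """Mimic an endpoint: 200 when the spec is met, 400 with details otherwise."""
    problems = validate_event(payload, LEAD_EVENT_SPEC)
    if problems:
        return 400, {"errors": problems}
    return 200, {"status": "accepted"}
```

Returning the list of problems in the 400 body is a deliberate choice here: the sender (or whoever triages the failure later) can see exactly which part of the spec was not met.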

When are webhooks most useful?

In short: when you need an automated process to run based on something in the outside world. The longer answer: all of that, plus the event is unpredictably timed and you need to respond to it ASAP.

A few specific examples:

B2C fraud detection workflow: a charge on the product looks fishy. The charge going through starts a supply chain process, so you need to catch and QA it in time.

B2B marketing enrichment process: marketing teams consolidate data for leads that go to sales so the sales rep has the most context on how they can work the lead. Relying on a dependency-heavy batch analytics model that re-processes history means high warehouse costs every time that complicated model refreshes.

D2C social network notification: consider a social network-type platform, but for a specific industry - like sports betting. Certain people put out plays for upcoming sporting events - as the consumer, wouldn’t you want to know about these plays ASAP to make the most informed bets?

What’s common among these? Something in the outside world occurs, a process needs to run as a result of that occurrence quickly after, and it would be inefficient or extremely cumbersome to rely on a batch implementation to do so.

I have personally implemented all three of these situations. When I tried to do so via a batch-scheduled process, I hit some roadblocks:

The process took 1+ hours to run but I needed a response process to run within minutes.

When trying to reduce run time, I ended up hitting a wall with how much history existed - maintaining a fully updated snapshot of all the information required is quite the computational burden.

Decoupling requirements was no small feat - when I tried to just run “what was really needed”, I ended up still finding other dependent processes that I couldn’t get around.

I then begrudgingly resorted to webhooks to process data for only one event (as opposed to all of history as a snapshot). As I expected, I hit some challenges.

The challenge with webhooks

Webhooks by nature are hard to observe and debug. The implications of this are:

Alert fatigue. You get alerts for 400s on POST requests to the webhook API endpoint. However, the requests and errors don’t contain much information, so you ignore the alerts.

Triage is a mess. Because requests aren’t necessarily centralized, the POST requests that aren’t successfully interpreted fall into a triaging black hole. Learning how to fix these errors involves digging through complex systems and tools, and likely picking up some tribal knowledge.

Broken workflows. While in this triaging black hole, workflows that are supposed to run as a result of an event aren’t running. Things are broken - which is a less than ideal state for anything business critical.

If dealing with APIs is so finicky, the question remains - how did we get here? Why aren’t all systems like this?

Consider the world of batch data pipelines. Webhooks usually aren’t part of this scope of work for data engineers because of the API endpoint maintenance required. For scheduled data pipelines: infrastructure is usually ephemeral (only spun up when needed), and data SLAs are in the scope of hours. This means the data team isn’t bound to PagerDuty on-call rotations.

Properties of batch data pipelines: batch data pipelines are orchestrated centrally - which means debugging starts in one dashboard that shows which workflow failed and why. Data pipelines built well have a key property of being observable: their start and end are known; the systems they interact with are encoded; and they can be easily rerun upon failure.

Event-driven complexity: Work that’s event-driven changes all of that. Consider what happens if an API spec for a webhook endpoint changes, but that change isn’t reflected in events sent to the endpoint. An event happens once, and only once - if it’s not accepted, how would the webhook response be rerun? How should the API change be reflected in the source system, if that’s even known?

Why we need webhooks and how to scale them

This is not a Romeo and Juliet ending. Webhooks are useful - we need them, but with guardrails to not end up with an interconnected web that feels like a house of cards.

Parameters for choosing a webhook implementation

A world without webhooks would mean having a recurring batch process running to meet requirements for a quick response to a new fraud/alert/lead event. This process would be costly because of the amount of historical data and intertwined dependencies. Consider the examples earlier in the article when webhooks are most useful - fraud detection, social network alerting, and B2B lead flow.

The key difference with a webhook is we process one data point at a time (the particular fraud/lead/notification event) instead of aggregating across all events to maintain an up-to-date snapshot of all historical events. Specifically, consider: as a new, potentially fraudulent loan event comes in, query data relevant to that one loan and one loan only, instead of making sure all loan data is up to date.

For those of you for whom SQL helps in this explanation, consider the differences between the two queries:

```sql
-- Update all history: compute cost scales linearly with number of loans
select l.loan_id, max(f.the_info_i_need)
from loans as l
left join loan_information_on_fraud_stuff as f on l.loan_id = f.loan_id
group by 1;

-- Get only one loan's info: if partitioned, compute cost remains relatively the same as loans increase
-- {LOAN_VARIABLE} is a placeholder for the loan ID carried by the incoming event
select max(f.the_info_i_need)
from loan_information_on_fraud_stuff as f
where f.loan_id = {LOAN_VARIABLE};
```

The fundamental difference is how these two approaches scale on the backend - fetching information about one specific fraudulent loan/social alert/sales lead scans far less data and is thus much faster, and the gap widens as history grows. This is also how application backends are built - instead of maintaining a joined view of the world, individual objects are fetched to provide a seamless user experience - but I digress.
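For anyone who wants to see the single-loan query run from a webhook handler, here is a minimal sketch. It assumes an in-memory SQLite database as a stand-in for the warehouse, and the sample rows are made up; the table and column names are the ones from the query above. The loan ID is bound as a query parameter rather than pasted into the SQL string, which is the safer way to fill in a placeholder like `{LOAN_VARIABLE}`.

```python
import sqlite3

# Stand-in for the warehouse: an in-memory SQLite database with made-up rows.
conn = sqlite3.connect(":memory:")
conn.execute(
    "create table loan_information_on_fraud_stuff (loan_id integer, the_info_i_need integer)"
)
conn.executemany(
    "insert into loan_information_on_fraud_stuff values (?, ?)",
    [(1, 10), (1, 30), (2, 5)],
)


def fetch_loan_info(connection, loan_id):
    """Fetch the value for one loan only, binding loan_id as a parameter."""
    row = connection.execute(
        "select max(f.the_info_i_need) "
        "from loan_information_on_fraud_stuff as f "
        "where f.loan_id = ?",
        (loan_id,),
    ).fetchone()
    return row[0]  # None when the loan has no rows


print(fetch_loan_info(conn, 1))
```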

Here’s how to decide if webhooks are advantageous for a particular implementation:

Quick response requirements. Quick response times (in minutes or even seconds) are required after something occurs.

👎 Without webhooks: response times would slip due to dependent processes or be costly to scale.

History is large. The data required to process one event is small, but there is a long history of events. This means processing all relevant data would be quite costly at the frequency required, while processing only the data relevant to one event would be cheap.

👎 Without webhooks: a long historical update would be constantly running, with response times slipping and costs climbing as history grows.

Events travel across teams and systems. Responding to the event requires integrations with different teams and systems, where you don’t control data formats and have to interact with third-party APIs.

👎 Without webhooks: you hand-code every relationship with third-party APIs instead of encoding a standard that sources can adapt to programmatically.

Scale webhooks effectively

Even when webhooks may meet business requirements and save money, implementing them incorrectly will be a net negative to your operations. If they remain fully decentralized they will be unobservable and lead you to the triage black hole.

Observability through alerts. Alert on: failure response codes (anything outside the 2xx range), latency issues (slow response times), and webhook infrastructure overload (memory exceeding a set threshold).

In-depth logs and documentation. Alerts without context are utterly useless. So you get a 400 response code - but for what event, with what inbound request, and when? Store in-depth response codes and logs. When possible, maintain internal documentation of systems from which requests come in.

Find a centralized hub. Deploy webhooks consistently to know where data passes through - this will then centralize alerts and logs so debugging starts in one place.
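The first two principles can be sketched in a few lines: decide when to alert, then attach enough context to the alert that it is worth reading. This is a rough illustration, not how any specific tool does it. The thresholds, the field names, and the `website_form` source are all hypothetical, and "failure" is taken to mean any status outside the 2xx range.

```python
import json
from datetime import datetime, timezone

# Hypothetical thresholds; tune them to your own workflows.
MAX_LATENCY_MS = 2000
MAX_MEMORY_MB = 256


def alert_reasons(status_code, latency_ms, memory_mb):
    """Return the reasons this webhook run should alert; an empty list means healthy."""
    reasons = []
    if not 200 <= status_code < 300:
        reasons.append(f"failure response code {status_code}")
    if latency_ms > MAX_LATENCY_MS:
        reasons.append(f"slow response: {latency_ms} ms")
    if memory_mb > MAX_MEMORY_MB:
        reasons.append(f"memory over threshold: {memory_mb} MB")
    return reasons


def build_log_record(source, event_name, request_body, status_code, latency_ms, memory_mb):
    """Bundle the alert with its context: which event, what inbound request, and when."""
    return {
        "logged_at": datetime.now(timezone.utc).isoformat(),
        "source": source,
        "event_name": event_name,
        "request_body": request_body,
        "status_code": status_code,
        "alerts": alert_reasons(status_code, latency_ms, memory_mb),
    }


record = build_log_record(
    "website_form", "lead.created", {"email": "a@example.com"}, 400, 150, 64
)
print(json.dumps(record, indent=2))
```

Sending every record like this to one place (a table, a log stream, a Slack channel) is what the third principle, the centralized hub, amounts to in practice.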

Consolidating these three principles genuinely turned webhooks from something I dreaded into something I now use extensively in marketing ops (as one example). These principles are inherent to platform engineering. When webhooks are hosted by your engineering team, this implies all the bells and whistles of infrastructure observability (think Datadog). But how do you do this as a non-engineer in ops, marketing, sales, revops, and the like? My answer: think like a developer, without learning to code.

Note: I am not paid or enticed in any way to write the next section, I am just a fanatic user of this product.

Pipedream is a workflow automation platform that offers hundreds of out-of-the-box API integrations but maintains room for customization. Practically, it allows users to deploy event-driven, API-based workflows that string actions together. You might think: Sarah, why are you not mentioning Zapier?

I have, indeed, tried Zapier. And you know what? It’s fine. But: you still fall into the triage black hole for a small number of important reasons.

Retries and live debugging: upon failure, Zapier has an “auto replay” feature where a failing Zap can be rerun. However, it fails to account for the most basic situation: a Zap is fixed with a new step and needs to be rerun in its new state. This is not possible. When building or debugging, editing based on live events is non-intuitive.

Error handling: this one I have trouble explaining on a non-emotional level - when using Zapier, I constantly missed errors, or would have to retry them one at a time, or dig through logs in obscure places. Logging and errors did not feel like a first-class citizen (they only added error handling in Feb 2024), so debugging took significantly more time than expected.

Customization and testing: even as a non-engineer, you’ll likely have to make an API request to an unsupported tool, and need to live test what you’re doing. Zapier doesn’t let you make custom requests to an API endpoint - which means you’re fundamentally not able to create a centralized hub of all webhook activity.

What’s different about Pipedream? It’s built with the context of successful developer tooling, like:

Genuinely fast workflow builder based on test events

Single view for failed runs with quickly accessible logs

Control setup through memory constraints, retries, etc

Ability to ping custom API endpoints, even if integrations are not built

Listening and scheduling triggers for versatile use cases

… and more. Pipedream is the missing piece to all operational workflows, and from an engineering and operational perspective, is genuinely a joy to use.

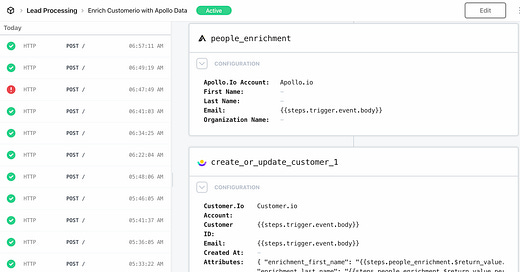

An example: Prefect’s lead flow

Let’s walk through a practical example in B2B - lead processing. Once a lead comes in, many things need to happen. These things need to occur with information that is up-to-date to the time the lead came in. Otherwise - stale information produces errors and confusion.

The lead is enriched using a third party (we use Apollo)

Additional enrichment from first-party data occurs (query BigQuery directly)

Create the lead in Salesforce for custom outreach - check Salesforce that a lead doesn’t already exist, in which case a lead task needs to be created instead

Or, route the lead to an automated Outreach campaign
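The branching in these steps can be sketched as one small function. This is an illustration of the decision logic only, not Prefect's actual workflow. Every callable passed in is a hypothetical stand-in for a real integration (Apollo, BigQuery, Salesforce), and the rule for choosing custom outreach over the automated campaign is an assumption, since the steps above don't spell it out.

```python
from typing import Callable


def process_lead(
    lead: dict,
    enrich_third_party: Callable[[dict], dict],
    enrich_first_party: Callable[[dict], dict],
    lead_exists: Callable[[str], bool],
    wants_custom_outreach: Callable[[dict], bool],
) -> dict:
    """Enrich one lead, then decide which downstream action applies."""
    # Merge both enrichment results onto the original lead.
    enriched = {**lead, **enrich_third_party(lead), **enrich_first_party(lead)}
    if not wants_custom_outreach(enriched):
        action = "route_to_automated_campaign"
    elif lead_exists(enriched["email"]):
        # The lead is already in the CRM, so a task is created instead of a duplicate.
        action = "create_lead_task"
    else:
        action = "create_lead"
    return {"lead": enriched, "action": action}


# Usage with stubbed integrations (all values are made up).
result = process_lead(
    {"email": "a@example.com"},
    enrich_third_party=lambda lead: {"company": "Example Co"},
    enrich_first_party=lambda lead: {"is_product_user": True},
    lead_exists=lambda email: False,
    wants_custom_outreach=lambda lead: lead["is_product_user"],
)
print(result["action"])
```

Because everything is computed for the one lead that just arrived, the enrichment reflects the state of the world at that moment rather than the last batch refresh.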

While at Prefect we use Customer.io for a lot of automation (and love it), Customer.io does not handle webhook requests elegantly - for instance, if a request was sent but the response was not a 200, Customer.io still thinks the request was successful (it was not). Instead, for all of the above, we use Pipedream. The problems Pipedream solves are:

Out-of-the-box integrations: pre-built connectors for third-party tools like Apollo, Salesforce, Customer.io as well as internal data stores like BigQuery

Quick development time: send test events and build workflows from them with auto-fill

Handling custom tasks: ability to post to a custom endpoint (no automation tools support adding a prospect to Outreach and adding it to a sequence)

Up-to-date information: query single data points by specific email/user ID, not relying on a master dbt model to complete

Easy error handling and alerting: route all errors with logs to Slack/email, and view all history in-UI
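The failure mode described above, where a non-200 response is still counted as a success, comes down to never checking the status code. Here is a minimal sketch of the opposite behavior: treat anything outside the 2xx range as a failure, retry a limited number of times, and keep a record of what happened. The `deliver` function and the `send` callable are hypothetical; this is not how Pipedream or Customer.io is implemented.

```python
import time
from typing import Callable


def deliver(
    send: Callable[[dict], int],
    payload: dict,
    max_attempts: int = 3,
    backoff_seconds: float = 0.0,
) -> dict:
    """Call send(payload) until it returns a 2xx status or the attempts run out."""
    statuses = []
    for attempt in range(1, max_attempts + 1):
        status = send(payload)
        statuses.append(status)
        if 200 <= status < 300:
            return {"ok": True, "attempts": attempt, "statuses": statuses}
        if attempt < max_attempts:
            # Wait a little longer before each subsequent retry.
            time.sleep(backoff_seconds * attempt)
    return {"ok": False, "attempts": max_attempts, "statuses": statuses}


# Usage with a fake sender that fails twice and then succeeds.
responses = iter([500, 503, 200])
outcome = deliver(lambda payload: next(responses), {"email": "a@example.com"})
print(outcome)
```

A real setup would likely retry only transient failures (5xx responses, timeouts) and send the final `statuses` list to wherever alerts and logs are centralized.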

Webhooks have so many uses, particularly for operations. Having come from data engineering and moved to marketing, I can say the number of systems in use, particularly in marketing ops, is quite high. Keeping track of all of them with centralized, observable glue will be the way you scale to feel like you’re building workflows with bricks, not cards.

Thanks for reading! I talk all things marketing, growth, analytics, and tech so please reach out. I’d love to hear from you.